While my focus has been primarily on academic publication, many of my papers have been refined and later widely implemented in products and commercial applications. For example, my work on spherical harmonic lighting and irradiance environment maps is now widely included in games (such as the Halo series), and is increasingly adopted in movie production (being a critical component of the rendering pipeline in Avatar in 2010, and now an integral part of RenderMan 16, since mid-2011). These ideas are also being used by Adobe for relighting, and are now included in many standard textbooks. My research on importance sampling has inspired a sampling and image-based lighting pipeline that is becoming standard for production rendering (also included in RenderMan 16) and is used, for example, on the Pixar movie Monsters University (my papers discussing these production methods appear at EGSR 2012 and as the inaugural JCGT paper).

Models for volumetric scattering have been used in demos by NVIDIA and elsewhere in industry. I also participated in developing the first electronic field guide; a subsequent iPhone app developed by Prof. Belhumeur and colleagues is now widely used by the public for visual species identification.

Most recently, work on sampling and reconstruction for rendering (frequency analysis, adaptive wavelet rendering) has inspired widespread use of Monte Carlo denoising in industry, and has been recognized as seminal in an EG STAR report. Subsequent work on real-time physically-based rendering and denoising (axis-aligned filtering for soft shadows) has inspired modern software and hardware real-time AI denoisers, which are now integrated into OptiX 5 and NVIDIA's RTX chips (2017, 2018). As a result, physically-based (ray-traced) rendering with denoising is now a reality in both offline and real-time rendering pipelines. Our new fur reflectance model has been used for all animal fur in the 2017 movie War for the Planet of the Apes, nominated for a visual effects Oscar, while new glint models have been used in Autodesk Fusion 360 and in games.

Here is a selection of recent invited talks that give an overview of my research.

|

A Free-Space Diffraction BSDF

SIGGRAPH 2024

We derive an edge-based formulation of Fraunhofer diffraction, which is well suited to the common (triangular) geometric meshes used in computer graphics. Our method dynamically constructs a free-space diffraction BSDF by considering the geometry around the intersection point of a ray of light with an object, and we present an importance sampling strategy for these BSDFs.

Paper: PDF

Supplementary: PDF

|

|

Practical Error Estimation for Denoised Monte Carlo Image Synthesis

SIGGRAPH 2024

We present a practical global error estimation technique for Monte Carlo ray tracing combined with deep learning based denoising. Our method uses aggregated estimates of bias and variance to determine the squared error distribution of the pixels, and develops a stopping criterion for an error threshold.

Paper: PDF
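The idea of stopping once an error threshold is met can be illustrated, in a much simpler setting, with a generic rule for a plain (unbiased, non-denoised) Monte Carlo estimate: keep adding batches of samples until the estimated variance of the mean drops below a squared-error threshold. This sketch assumes a caller-supplied `sample` function and hypothetical parameters `threshold`, `batch` and `max_samples`; it ignores denoiser bias entirely, which is exactly the part the paper addresses, so it is not the paper's estimator.

```python
import random
from collections.abc import Callable


def sample_until_converged(sample: Callable[[], float], threshold: float,
                           batch: int = 64, max_samples: int = 1 << 20) -> tuple[float, int]:
    """Average samples in batches until the estimated variance of the mean is below threshold.

    Hypothetical helper: for an unbiased estimator the expected squared error of the
    mean equals its variance, so (sample variance) / n estimates the squared error.
    """
    n, mean, m2 = 0, 0.0, 0.0  # m2: running sum of squared deviations (Welford's method)
    while n < max_samples:
        for _ in range(batch):
            x = sample()
            n += 1
            delta = x - mean
            mean += delta / n
            m2 += delta * (x - mean)
        if n > 1 and (m2 / (n - 1)) / n < threshold:
            break
    return mean, n


rng = random.Random(0)
mean, n = sample_until_converged(rng.random, 1e-5)
```

For uniform samples (variance 1/12), the loop stops after roughly 0.0833 / 1e-5, i.e. a little over 8,000 samples, rounded up to a whole batch.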

|

|

Neural Geometry Fields for Meshes

SIGGRAPH 2024

We present Neural Geometry Fields, a neural representation for discrete surface geometry represented by triangle meshes. Our idea is to represent the target surface using a coarse set of quadrangular patches, and add surface details using coordinate neural networks by displacing the patches.

Paper: PDF

Supplementary: PDF

Video: MP4

|

|

A Construct-Optimize Approach to Sparse View Synthesis without Camera Pose

SIGGRAPH 2024

In this paper, we leverage the recent 3D Gaussian splatting method to develop a novel construct-and-optimize method for sparse view synthesis without camera poses. Specifically, we construct a solution progressively by using monocular depth and projecting pixels back into the 3D world. During construction, we optimize the solution by detecting 2D correspondences between training views and the corresponding rendered images. We develop a unified differentiable pipeline for camera registration and adjustment.

Paper: PDF

Supplementary: PDF

Video: MP4

|

|

Neural Directional Encoding for Efficient and Accurate View-Dependent Appearance Modeling

CVPR 2024

We present Neural Directional Encoding (NDE), a view-dependent appearance encoding of neural radiance fields (NeRF) for rendering specular objects. NDE transfers the concept of feature-grid-based spatial encoding to the angular domain, significantly improving the ability to model high-frequency angular signals.

Paper: PDF

Supplementary: PDF

Video: MP4

|

|

What You See is What You GAN: Rendering Every Pixel for High-Fidelity Geometry in 3D GANs

CVPR 2024

In this work, we propose techniques to scale neural volume rendering to the much higher resolution of native 2D images, thereby resolving fine-grained 3D geometry with unprecedented detail. Our approach employs learning-based samplers for accelerating neural rendering for 3D GAN training using up to 5 times fewer depth samples. This enables us to explicitly render every pixel of the full-resolution image during training.

Paper: PDF Video: MP4

|

|

Lift3D: Zero-Shot Lifting of Any 2D Vision Model to 3D

CVPR 2024

In this paper, we ask whether any 2D vision model can be lifted to make 3D-consistent predictions. We answer this question in the affirmative: our new Lift3D method trains to predict unseen views on feature spaces generated by a few visual models (i.e., DINO and CLIP), but then generalizes to novel vision operators and tasks, such as style transfer, super-resolution, open-vocabulary segmentation and image colorization; for some of these tasks, there is no comparable previous 3D method.

Paper: PDF

|

|

Importance Sampling BRDF Derivatives

TOG 2024

In differentiable rendering, BRDFs are replaced by their differential counterparts, which are real-valued and can take negative values. Our work generalizes BRDF derivative sampling to anisotropic microfacet models, mixture BRDFs, Oren-Nayar, Hanrahan-Krueger, and other analytic BRDFs.

Paper: PDF
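One generic way to handle an integrand that changes sign, often called positivization, is to split it as $f = f^+ - f^-$ and importance-sample each part from its own density. The toy sketch below is not the paper's BRDF-derivative sampling; it assumes a hypothetical 1D integrand $f(x) = 1 - 2x$ on $[0, 1]$, whose positive and negative parts can be sampled exactly by inverting their CDFs, and compares the result with uniform sampling of the signed function.

```python
import math
import random


def f(x: float) -> float:
    """Toy signed integrand on [0, 1] (hypothetical); its exact integral is 0."""
    return 1.0 - 2.0 * x


def sample_pos(u: float) -> tuple[float, float]:
    """Inverse-CDF sample of pdf 4(1 - 2x) on [0, 0.5), proportional to f+ = max(f, 0)."""
    x = 0.5 * (1.0 - math.sqrt(1.0 - u))  # u in [0, 1) keeps x < 0.5, so the pdf is > 0
    return x, 4.0 * (1.0 - 2.0 * x)


def sample_neg(u: float) -> tuple[float, float]:
    """Inverse-CDF sample of pdf 4(2x - 1) on (0.5, 1], proportional to f- = max(-f, 0)."""
    x = 0.5 * (1.0 + math.sqrt(u))  # u in (0, 1] keeps x > 0.5, so the pdf is > 0
    return x, 4.0 * (2.0 * x - 1.0)


def estimate_naive(n: int, rng: random.Random) -> float:
    """Uniform sampling of the signed integrand: unbiased but noisy."""
    return sum(f(rng.random()) for _ in range(n)) / n


def estimate_positivized(n: int, rng: random.Random) -> float:
    """Estimate the integral of f as (integral of f+) - (integral of f-), sampling each part separately."""
    total = 0.0
    for _ in range(n):
        xp, pdf_p = sample_pos(rng.random())
        xn, pdf_n = sample_neg(1.0 - rng.random())
        total += max(f(xp), 0.0) / pdf_p - max(-f(xn), 0.0) / pdf_n
    return total / n
```

With exact sampling, each part contributes 0.25 per sample, so the positivized estimate has zero variance in this toy case, while uniform sampling of the signed function has variance 1/3 per sample.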

|

|

Decorrelating ReSTIR Samplers via MCMC Mutations

TOG 2024

We demonstrate how interleaving Markov Chain Monte Carlo (MCMC) mutations with reservoir resampling helps alleviate correlation issues, especially in scenes with glossy materials and difficult-to-sample lighting. Moreover, our approach does not introduce any bias, and in practice we find considerable improvement in image quality with just a single mutation per reservoir sample in each frame.

Paper: PDF Video: M4V Supplementary: PDF

|

|

Conditional Resampled Importance Sampling and ReSTIR

SIGGRAPH Asia 2023

Recent work on generalized resampled importance sampling (GRIS) enables importance-sampled Monte Carlo integration with random variable weights replacing the usual division by probability density. In this paper, we extend GRIS to conditional probability spaces, showing correctness given certain conditional independence between integration variables and their unbiased contribution weights. To show our theory has practical impact, we prototype a modified ReSTIR PT with a final gather pass. This reuses subpaths, postponing reuse at least one bounce along each light path.

Paper: PDF Video: MP4
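The building block that GRIS generalizes is plain resampled importance sampling (RIS) with a streaming reservoir, as used in ReSTIR. The sketch below is the textbook single-reservoir version on $[0, 1]$ with a uniform source pdf and a caller-supplied target function; it shows how the unbiased contribution weight $W$ takes the place of $1/\mathrm{pdf}$. It does not implement the conditional extension, subpath reuse or final gather described in the paper, and the function name `ris_estimate` is hypothetical.

```python
import random
from collections.abc import Callable


def ris_estimate(f: Callable[[float], float], target: Callable[[float], float],
                 m: int, rng: random.Random) -> float:
    """One RIS estimate of the integral of f over [0, 1] from m uniform candidates.

    Assumes target(x) >= 0, and target(x) > 0 wherever f(x) != 0 (needed for unbiasedness).
    """
    w_sum = 0.0
    y = None
    for _ in range(m):
        x = rng.random()           # candidate from the source pdf p(x) = 1 on [0, 1)
        w = target(x)              # resampling weight p_hat(x) / p(x), with p(x) = 1
        w_sum += w
        # Weighted reservoir update: keep x with probability w / w_sum.
        if w > 0.0 and rng.random() < w / w_sum:
            y = x
    if y is None:
        return 0.0
    # Unbiased contribution weight: stands in for 1 / pdf(y) in the usual estimator.
    contribution_weight = w_sum / (m * target(y))
    return f(y) * contribution_weight
```

For example, averaging many `ris_estimate(lambda x: x * x, lambda x: x, 8, rng)` calls approaches 1/3, the integral of $x^2$ on $[0, 1]$, even though the target $x$ only approximates the integrand.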

|

|

Discontinuity-Aware 2D Neural Fields

SIGGRAPH Asia 2023

We construct a feature field that is discontinuous only across known discontinuity locations and smooth everywhere else, and finally decode the features into the signal value using a shallow multi-layer perceptron. We develop a new data structure based on a curved triangular mesh, and demonstrate applications in rendering, simulation and physics-informed neural networks.

Paper: PDF Video: MP4

|

|

OpenIllumination: A Multi-Illumination Dataset for Inverse Rendering Evaluation on Real Objects

NeurIPS 2023

We introduce OpenIllumination, a real-world dataset containing over 108K images of 64 objects with diverse materials, captured under 72 camera views and a large number of different illuminations. For each image in the dataset, we provide accurate camera parameters, illumination ground truth, and foreground segmentation masks. Our dataset enables the quantitative evaluation of most inverse rendering and material decomposition methods for real objects.

Paper: PDF

|

|

NeRFs: The Search for the Best 3D Representation

Article for ICBS Frontiers of Science Award 2023

At their core, NeRFs describe a new representation of 3D scenes or 3D geometry as a continuous volume, with volumetric parameters like view-dependent radiance and volume density obtained by querying a neural network. In this article, we briefly review the NeRF representation and describe the three-decade-long quest to find the best 3D representation for view synthesis and related problems.

Paper: PDF

|

|

A Theory of Topological Derivatives for Inverse Rendering of Geometry

ICCV 2023

We introduce a theoretical framework for differentiable surface evolution that allows discrete topology changes through the use of topological derivatives for variational optimization of image functionals. While prior methods for inverse rendering of geometry rely on silhouette gradients for topology changes, such signals are sparse. In contrast, our theory derives topological derivatives that relate the introduction of vanishing holes and phases to changes in image intensity.

Paper: PDF

|

|

Factorized Inverse Path Tracing for Efficient and Accurate Material-Lighting Estimation

ICCV 2023

We propose a novel Factorized Inverse Path Tracing (FIPT) method which utilizes a factored light transport formulation and finds emitters driven by rendering errors.

Our algorithm enables accurate material and lighting optimization faster than previous work, and is more effective at resolving ambiguities.

Paper: PDF

Supplementary: PDF

|

|

Real-Time Radiance Fields for Single-Image Portrait View Synthesis

SIGGRAPH 2023

We present a one-shot method to infer and render a photorealistic 3D representation from a single unposed image (e.g., face portrait) in real-time. Given a single RGB input, our image encoder directly predicts a canonical triplane representation of a neural radiance field for 3D-aware novel view synthesis via volume rendering. Our method is fast (24 fps) on consumer hardware, and produces higher quality results than strong GAN-inversion baselines that require test-time optimization.

Paper: PDF

Video: MP4

|

|

NeuSample: Importance Sampling for Neural Materials

SIGGRAPH 2023

In this paper, we evaluate and compare various pdf-learning approaches for sampling spatially-varying neural materials, and propose new variations for three sampling methods: analytic-lobe mixtures, normalizing flows, and histogram predictions. Our versions of normalizing flows and histogram mixtures perform well and can be used in practical rendering systems for adoption of neural materials in production.

Paper: PDF

|

|

Parameter-Space ReSTIR for Differentiable and Inverse Rendering

SIGGRAPH 2023

We develop an algorithm to reuse Monte Carlo gradient samples between gradient iterations, motivated by reservoir-based temporal importance resampling in forward rendering. We reformulate differential rendering integrals in parameter space, develop a new resampling estimator that handles negative functions, and combine these ideas into a reuse algorithm for inverse texture optimization.

Paper: PDF

|

|

NerfDiff: Single-image View Synthesis with NeRF-guided Distillation from 3D-aware Diffusion

ICML 2023

Novel view synthesis from a single image requires inferring occluded regions of objects and scenes whilst simultaneously maintaining semantic and physical consistency with the input. In this work, we propose NerfDiff, which addresses this issue by distilling the knowledge of a 3D-aware conditional diffusion model (CDM) into NeRF through synthesizing and refining a set of virtual views at test-time.

Paper: PDF

|

|

View Synthesis of Dynamic Scenes based on Deep 3D Mask Volume

PAMI 2023

We introduce a multi-view video dataset, captured with a custom 10-camera rig at 120 FPS. The dataset contains 96 high-quality scenes showing various visual effects and human interactions in outdoor scenes. We develop a new algorithm, Deep 3D Mask Volume, which enables temporally stable view extrapolation from binocular videos of dynamic scenes captured by static cameras.

Paper: PDF

Video: MP4

|

|

PVP: Personalized Video Prior for Editable Dynamic Portraits using StyleGAN

EGSR 2023

In this work, our goal is to take as input a monocular video of a face and create an editable dynamic portrait able to handle extreme head poses. The user can create novel viewpoints, edit the appearance, and animate the face. Our method uses pivotal tuning inversion (PTI) to learn a personalized video prior from a monocular video sequence. We can then input pose and expression coefficients to MLPs and manipulate the latent vectors to synthesize different viewpoints and expressions of the subject.

Paper: PDF

Supplementary: PDF

Video: MP4

|

|

Neural Free-Viewpoint Relighting for Glossy Indirect Illumination

EGSR 2023

In this paper, we demonstrate a hybrid neural-wavelet PRT solution to high-frequency indirect illumination, including glossy reflection, for relighting with changing view. Specifically, we seek to represent the light transport function in the Haar wavelet basis. For global illumination, we learn the wavelet transport using a small multi-layer perceptron (MLP) applied to a feature field as a function of spatial location and wavelet index, with reflected direction and material parameters being other MLP inputs.

Paper: PDF

Video: MP4

|

|

MesoGAN: Generative Neural Reflectance Shells

CGF 2023 (Presented at EGSR 2023)

We introduce MesoGAN, a model for generative 3D neural textures. This new graphics primitive represents mesoscale appearance by combining the strengths of generative adversarial networks (StyleGAN) and volumetric neural field rendering.

Paper: PDF

Video: MP4

|

|

Vision Transformer for NeRF-Based View Synthesis from a Single Input Image

WACV 2023

We leverage both global and local features to form an expressive 3D representation for NeRF-based view synthesis from a single image. The global features are learned from a vision transformer, while the local features are extracted from a 2D convolutional network.

Paper: PDF

|

|

A Level Set Theory for Neural Implicit Evolution under Explicit Flows

(Best Paper Honorable Mention) ECCV 2022

We present a framework that allows applying deformation operations defined for triangle meshes onto neural implicit surfaces. Our method uses the flow field to deform parametric implicit surfaces by extending the classical theory of level sets.

Paper: PDF

Project: Code/Video

|

|

Physically-Based Editing of Indoor Scene Lighting from a Single Image

ECCV 2022

We present a method to edit complex indoor lighting from a single image. We tackle this problem using novel components: a holistic scene reconstruction method that estimates reflectance and parametric 3D lighting, and a neural rendering framework that re-renders the scene from our predictions. We enable light source insertion, removal and replacement.

Paper: PDF

Project: Code/Video

|

|

Covector Fluids

SIGGRAPH 2022

We propose a new velocity-based fluid solver derived from a reformulated Euler equation using covectors. Our method generates rich vortex dynamics by an advection process that respects the Kelvin circulation theorem. The numerical algorithm requires only a small local adjustment to existing advection-projection methods and can easily leverage recent advances therein. The resulting solver emulates a vortex method without the expensive conversion between vortical variables and velocities.

Paper: PDF

Video: MP4 YouTube Code: ZIP

|

|

Rendering Neural Materials on Curved Surfaces

SIGGRAPH 2022

The goal of this paper is to design a neural material representation capable of correctly handling silhouette effects. We extend the neural network query to take surface curvature information as input, while the query output is extended to return a transparency value in addition to reflectance. We train the new neural representation on synthetic data that contains queries spanning a variety of surface curvatures. We show an ability to accurately represent complex silhouette behavior that would traditionally require more expensive and less flexible techniques, such as on-the-fly geometry displacement or ray marching.

Paper: PDF

Video: MP4

|

|

Spatiotemporal Blue Noise Masks

EGSR 2022

We propose novel blue noise masks that retain high quality blue noise spatially, yet when animated produce values at each pixel that are well distributed over time. By extending spatial blue noise to spatiotemporal blue noise, we overcome the convergence limitations of prior blue noise works, enabling new applications for blue noise distributions.

Paper: PDF

Supplementary: PDF

Code and Data: Github Link

|

|

NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

CACM 2022

We present a method that achieves state-of-the-art results for synthesizing novel views of complex scenes by optimizing an underlying continuous volumetric scene function using a sparse set of input views. Our algorithm represents a scene using a fully connected (nonconvolutional) deep network, whose input is a single continuous 5D coordinate.

Paper: PDF

ECCV 2020: PDF Video: MP4 YouTube

|

|

Learning Neural Transmittance for Efficient Rendering of Reflectance Fields

BMVC 2021

We propose a novel method based on precomputed Neural Transmittance Functions to accelerate the rendering of neural reflectance fields. Our neural transmittance functions enable us to efficiently query the transmittance at an arbitrary point in space along an arbitrary ray without tedious ray marching, which effectively reduces the time-complexity of the rendering by up to two orders of magnitude.

Paper: PDF

|

|

Differentiable Time-Gated Rendering

SIGGRAPH Asia 2021

In this paper, we introduce a new theory of differentiable time-gated rendering that enjoys the generality of differentiating with respect to arbitrary scene parameters. Our theory also allows the design of advanced Monte Carlo estimators capable of handling cameras with near-delta or discontinuous time gates.

Paper: PDF

Supplementary: ZIP

|

|

Deep 3D Mask Volume for View Synthesis of Dynamic Scenes

ICCV 2021

The next key step in immersive virtual experiences is view synthesis of dynamic scenes. However, several challenges exist due to the lack of high-quality training datasets and the additional time dimension for videos of dynamic scenes. To address these issues, we introduce a multi-view video dataset, captured with a custom 10-camera rig at 120 FPS. The dataset contains 96 high-quality scenes showing various visual effects and human interactions in outdoor scenes. We develop a new algorithm, Deep 3D Mask Volume, which enables temporally stable view extrapolation from binocular videos of dynamic scenes captured by static cameras.

Paper: PDF

Video: MP4

|

|

Modulated Periodic Activations for Generalizable Local Functional Representations

ICCV 2021

We present a new representation that generalizes to multiple instances and achieves state-of-the-art fidelity. We use a dual-MLP architecture to encode the signals. A synthesis network creates a functional mapping from a low-dimensional input (e.g., pixel position) to the output domain (e.g., RGB color). A modulation network maps a latent code corresponding to the target signal to parameters that modulate the periodic activations of the synthesis network. We also propose a local functional representation which enables generalization.

Paper: PDF

|

|

NeuMIP: Multi-Resolution Neural Materials

SIGGRAPH 2021

We propose NeuMIP, a neural method for representing and rendering a variety of material appearances at different scales. Classical prefiltering (mipmapping) methods work well on simple material properties such as diffuse color, but fail to generalize to normals, self-shadowing, fibers or more complex structures and reflectances. We develop mipmap pyramids of neural textures to address this problem, along with neural offsets.

Paper: PDF

Video: MP4
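The classical baseline mentioned above can be sketched in a few lines: each mip level averages 2x2 blocks of the level below. This toy example (pure Python, square power-of-two textures of 3-vectors, hypothetical function names) also shows one reason the baseline breaks down for normals: averaging unit normals tilted in opposite directions gives a shorter vector pointing straight up, so the coarse level loses the information that the surface was bumpy. It illustrates the problem only; NeuMIP's neural texture pyramid and neural offsets are not shown.

```python
import math

Vec3 = tuple[float, float, float]


def downsample(level: list[list[Vec3]]) -> list[list[Vec3]]:
    """Box-filter a square, power-of-two texture by averaging each 2x2 block."""
    half = len(level) // 2
    out = []
    for i in range(half):
        row = []
        for j in range(half):
            block = [level[2 * i + di][2 * j + dj] for di in (0, 1) for dj in (0, 1)]
            row.append(tuple(sum(texel[k] for texel in block) / 4.0 for k in range(3)))
        out.append(row)
    return out


def build_mip_pyramid(texture: list[list[Vec3]]) -> list[list[list[Vec3]]]:
    """Return [level 0, level 1, ...] down to a single 1x1 texel."""
    levels = [texture]
    while len(levels[-1]) > 1:
        levels.append(downsample(levels[-1]))
    return levels


# Diffuse colors average sensibly ...
colors = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]]
print(build_mip_pyramid(colors)[-1][0][0])  # (0.5, 0.5, 0.5)

# ... but unit normals tilted +/-45 degrees about the y axis do not.
s = math.sqrt(0.5)
normals = [[(s, 0.0, s), (-s, 0.0, s)], [(s, 0.0, s), (-s, 0.0, s)]]
top = build_mip_pyramid(normals)[-1][0][0]
print(math.sqrt(sum(c * c for c in top)))  # about 0.707, no longer unit length
```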

|

|

Deep Relightable Appearance Models for Animatable Faces

SIGGRAPH 2021

We present a method for building high-fidelity animatable 3D face models that can be posed and rendered with novel lighting environments in real-time. We first train an expensive but generalizable model on point-light illuminations, and use it to generate a training set of high-quality synthetic face images under natural illumination conditions. We then train an efficient model on this augmented dataset.

Paper: PDF

Video: MP4

|

|

Kelvin Transformations for Simulations on Infinite Domains

SIGGRAPH 2021

We introduce a general technique to transform a PDE problem on an unbounded domain to a PDE problem on a bounded domain. Our method uses the Kelvin transform, which essentially inverts the distance from the origin. We factor the desired solution into the product of an analytically known asymptotic component and another function to solve for, demonstrating Poisson, Laplace and Helmholtz problems.

Paper: PDF

Video: MP4
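For reference, the classical Kelvin transform in three dimensions, with respect to a sphere of radius $R$ centered at the origin, is shown below. It maps a point at distance $|x|$ to one at distance $R^2/|x|$, so the unbounded exterior of the sphere corresponds to its bounded interior; $\mathcal{K}u$ is harmonic wherever $u$ is harmonic, and applying $\mathcal{K}$ twice returns $u$. This is only the textbook statement for the Laplace equation; how the paper adapts it to Poisson and Helmholtz problems and factors out the asymptotic component is not reproduced here.

$$
x^{*} = \frac{R^{2}\,x}{|x|^{2}}, \qquad
(\mathcal{K}u)(x) = \frac{R}{|x|}\; u\!\left(\frac{R^{2}\,x}{|x|^{2}}\right), \qquad
x \in \mathbb{R}^{3} \setminus \{0\}.
$$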

|

|

Hierarchical Neural Reconstruction for Path Guiding Using Hybrid Path and Photon Samples

SIGGRAPH 2021

We present a hierarchical neural path guiding framework which uses both path and photon samples to reconstruct high-quality sampling distributions. Uniquely, we design a neural network to directly operate on a sparse quadtree, which regresses a high-quality hierarchical sampling distribution. Our novel hierarchical framework enables more fine-grained directional sampling with less memory usage, improving both practicality and efficiency. This is the follow-up to the Photon-Driven Neural Reconstruction paper below.

Paper: PDF

Supplementary: PDF

|

|

Photon-Driven Neural Reconstruction for Path Guiding

TOG 2021

We present a novel neural path guiding approach that can reconstruct high-quality sampling distributions for path guiding from a sparse set of samples, using an offline-trained neural network. We leverage photons traced from light sources as the primary input for sampling density reconstruction, which is effective for challenging scenes with strong global illumination. This is the precursor to the Hierarchical Neural Reconstruction paper above.

Paper: PDF

Supplementary: PDF

|

|

Vectorization for Fast, Analytic and Differentiable Visibility

TOG 2021

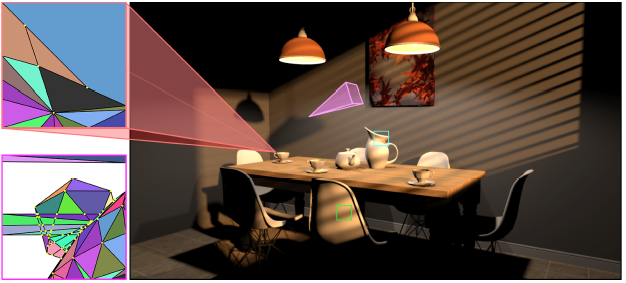

We develop a new rendering method, vectorization, that computes analytic solutions to 2D point-to-region integrals in conventional ray tracing and rasterization pipelines. Our approach revisits beam tracing and maintains all the visible regions formed by intersections and occlusions in the beam (shown leftmost for primary visibility and shadows).

Paper: PDF

Video: MP4

|

|

Neural Light Transport for Relighting and View Synthesis

TOG 2021

We propose a semi-parametric approach for learning a neural representation of the light transport of a scene. The light transport is embedded in a texture atlas of known but possibly rough geometry. We model all non-diffuse and global light transport as residuals added to a physically-based diffuse base rendering.

Paper: PDF

Video: MP4

|

|

NeLF: Neural Light-Transport Field for Portrait View Synthesis and Relighting

EGSR 2021

We present a system for portrait view synthesis and relighting: given multiple portraits, we use a neural network to predict the light-transport field in 3D space, and from the predicted Neural Light-transport Field (NeLF) produce a portrait from a new camera view under a new environmental lighting.

Paper: PDF

Video: MOV

|

|

Human Hair Inverse Rendering using Multi-View Photometric Data

EGSR 2021

We introduce a hair inverse rendering framework to reconstruct high-fidelity 3D geometry of human hair, as well as its reflectance, which can be readily used for photorealistic rendering of hair. We demonstrate the accuracy and efficiency of our method using photorealistic synthetic hair rendering data.

Paper: PDF

Video: MOV

|

|

Uncalibrated, Two Source Photo-Polarimetric Stereo

PAMI 2021

In this paper we present methods for estimating shape from polarisation and shading information, i.e. photo-polarimetric shape estimation, under varying but unknown illumination, i.e. in an uncalibrated scenario. We propose several alternative photo-polarimetric constraints that depend upon the partial derivatives of the surface and show how to express them in a unified system of partial differential equations of which previous work is a special case.

Paper: PDF

|

|

OpenRooms: An Open Framework for Photorealistic Indoor Scene Datasets

CVPR 2021

We propose a novel framework for creating large-scale photorealistic datasets of indoor scenes, with ground truth geometry, material, lighting and semantics. Our goal is to make the dataset creation process widely accessible, transforming scans into photorealistic datasets with high-quality ground truth.

Paper: PDF

Supplementary

Video: MP4

|

|

Real-Time Selfie Video Stabilization

CVPR 2021

We propose a novel real-time selfie video stabilization method that is completely automatic and runs at 26fps. We use a 1D linear convolutional network to directly infer the rigid moving least squares warping which implicitly balances between the global rigidity and local flexibility. We also collect a selfie video dataset with 1005 videos for evaluation.

Paper: PDF

Supplementary

Video: MP4

Code+Data

|

|

Neural Reflectance Fields for Appearance Acquisition

arXiv 2020

We present Neural Reflectance Fields, a novel deep scene representation that encodes volume density, normal and reflectance properties at any 3D point in a scene using a fully-connected neural network. We combine this representation with a physically-based differentiable ray marching framework that can render images from a neural reflectance field under any viewpoint and light. We demonstrate that neural reflectance fields can be estimated from images captured with a simple collocated camera-light setup.

Paper: PDF Video: MP4

|

|

Fourier Features Let Networks Learn High Frequency Functions in Low Dimensional Domains

NeurIPS 2020

We show that passing input points through a simple Fourier feature mapping enables a multilayer perceptron (MLP) to learn high-frequency functions in low-dimensional problem domains. These results shed light on recent advances in computer vision and graphics that achieve state-of-the-art results by using MLPs for complex 3D objects and scenes.

Paper: PDF
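A minimal pure-Python sketch of the Gaussian Fourier feature mapping, $\gamma(\mathbf{v}) = [\cos(2\pi \mathbf{B}\mathbf{v}), \sin(2\pi \mathbf{B}\mathbf{v})]$, where each entry of the $m \times d$ matrix $\mathbf{B}$ is drawn from $\mathcal{N}(0, \sigma^2)$. The embedding would be fed to the MLP in place of the raw input coordinates; the MLP itself is omitted, and the function names and example values of $m$ and $\sigma$ are illustrative assumptions.

```python
import math
import random


def sample_frequencies(m: int, d: int, sigma: float, rng: random.Random) -> list[list[float]]:
    """Draw an m x d frequency matrix B with i.i.d. N(0, sigma^2) entries."""
    return [[rng.gauss(0.0, sigma) for _ in range(d)] for _ in range(m)]


def fourier_features(v: list[float], b: list[list[float]]) -> list[float]:
    """Map a d-dimensional point v to [cos(2*pi*B v), sin(2*pi*B v)] (length 2m)."""
    proj = [2.0 * math.pi * sum(bij * vj for bij, vj in zip(row, v)) for row in b]
    return [math.cos(p) for p in proj] + [math.sin(p) for p in proj]


# Example: embed a 2D pixel coordinate with 16 frequencies (values are illustrative).
rng = random.Random(0)
B = sample_frequencies(16, 2, 10.0, rng)
features = fourier_features([0.25, 0.75], B)
```

Because $\cos a \cos b + \sin a \sin b = \cos(a - b)$, the inner product $\gamma(\mathbf{v}) \cdot \gamma(\mathbf{w})$ depends only on $\mathbf{v} - \mathbf{w}$, so the mapping induces a stationary (shift-invariant) kernel; a larger $\sigma$ yields higher-frequency features.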

|

|

Light Stage Super-Resolution: Continuous High-Frequency Relighting

SIGGRAPH Asia 2020

This paper proposes a learning-based solution for the super-resolution of scans of human faces taken from a light stage. Given an arbitrary query light direction, our method aggregates the captured images corresponding to neighboring lights in the stage, and uses a neural network to synthesize a rendering of the face that appears to be illuminated by a virtual light source at the query location.

Paper: PDF Video: MP4

|

|

Analytic Spherical Harmonic Gradients for Real-Time Rendering with Many Polygonal Area Lights

SIGGRAPH 2020

In this paper, we develop a novel analytic formula for the spatial gradients of the spherical harmonic coefficients for uniform polygonal area lights. The result is a significant generalization, involving the Reynolds transport theorem to reduce the problem to a boundary integral for which we derive a new analytic formula.

Paper: PDF Video: MP4

|

|

NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

(Best Paper Honorable Mention)

ECCV 2020

We synthesize novel views of complex scenes by optimizing an underlying continuous volumetric scene function using a sparse set of input views. Our algorithm represents a scene using a fully-connected (non-convolutional) deep network whose input is a single continuous 5D coordinate. We use classic differentiable volume rendering to create images from this representation.

Paper: PDF Video: MP4 YouTube
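The classic volume rendering step has a short discrete form. Along a ray with samples $i = 1, \dots, N$, the color is estimated as $\hat{C} = \sum_i T_i \,(1 - e^{-\sigma_i \delta_i})\, \mathbf{c}_i$ with $T_i = \exp(-\sum_{j<i} \sigma_j \delta_j)$, where $\sigma_i$ is the density, $\mathbf{c}_i$ the color and $\delta_i$ the distance between adjacent samples. The sketch below composites given per-sample values; the network queries, positional encoding and hierarchical sampling are omitted, and the function name `composite_ray` is hypothetical.

```python
import math


def composite_ray(sigmas: list[float], colors: list[tuple[float, float, float]],
                  deltas: list[float]) -> tuple[list[float], float]:
    """Front-to-back quadrature of the volume rendering integral along one ray.

    Returns the composited RGB color and the transmittance left after the last sample.
    """
    transmittance = 1.0                       # T_i = exp(-sum_{j<i} sigma_j * delta_j)
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        segment_t = math.exp(-sigma * delta)  # transmittance through this segment
        weight = transmittance * (1.0 - segment_t)
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= segment_t
    return rgb, transmittance
```

The weights $T_i (1 - e^{-\sigma_i \delta_i})$ are differentiable in $\sigma_i$ and $\mathbf{c}_i$, which is what allows the scene function to be optimized from images; they sum to $1 - T_{N+1}$, so an opaque ray's weights sum to one.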

|

|

Deep Reflectance Volumes: Relightable Reconstructions from Multi-View Photometric Images

ECCV 2020

We develop a novel volumetric scene representation for reconstruction from unstructured images. Our representation consists of opacity, surface normal and reflectance voxel grids. We present a novel physically-based differentiable volume ray marching framework to render these scene volumes under arbitrary viewpoint and lighting.

Paper: PDF Video: MP4

|

|

Deep Multi Depth Panoramas for View Synthesis

ECCV 2020

We propose a learning-based approach for novel view synthesis for multi-camera 360 degree panorama capture rigs. We present a novel scene representation, Multi Depth Panorama (MDP), that consists of multiple RGBD alpha panoramas that represent both scene geometry and appearance.

Paper: PDF Video: MP4

|

|

Deep Kernel Density Estimation for Photon Mapping

EGSR 2020

We present a novel learning-based photon mapping method that can be used to synthesize photorealistic images with detailed caustics from very sparse photons for scenes with complex diffuse-specular interactions. This is the first deep learning method for denoising particle-based rendering, and can produce global illumination effects like caustics with an order of magnitude fewer photons compared to previous photon mapping methods.

Paper: PDF

|

|

3D Mesh Processing using GAMer 2 to enable reaction-diffusion simulations in realistic cellular geometries

PLOS Computational Biology 2020

Recent advances in electron microscopy have enabled the imaging of single cells in 3D at nanometer length scale resolutions. An uncharted frontier for in silico biology is the ability to simulate cellular processes using these observed geometries. In this paper, we describe the use of our recently rewritten mesh processing software, GAMer 2, to bridge the gap between poorly conditioned meshes generated from segmented micrographs and boundary marked tetrahedral meshes which are compatible with simulation.

Paper: PDF

|

|

Inverse Rendering for Complex Indoor Scenes: Shape, Spatially-Varying Lighting and SVBRDF from a Single Image

CVPR 2020

We propose a deep inverse rendering framework for indoor scenes. From a single RGB image of an arbitrary indoor scene, we obtain a complete scene reconstruction, estimating shape, spatially-varying lighting, and spatially-varying, non-Lambertian surface reflectance. Our novel inverse rendering network incorporates physical insights.

Paper: PDF

|

|

Deep Stereo using Adaptive Thin Volume Representation with Uncertainty Awareness

CVPR 2020

We present Uncertainty-aware Cascaded Stereo Network (UCS-Net) for 3D reconstruction from multiple RGB images. We propose adaptive thin volumes (ATVs) for multi-view stereo (MVS). In an ATV, the depth hypothesis of each plane is spatially-varying, which adapts to the uncertainties of previous per-pixel depth predictions.

Paper: PDF

|

|

Learning Video Stabilization Using Optical Flow

CVPR 2020

We propose a novel neural network that infers the per-pixel warp fields for video stabilization from the optical flow fields of the input video. We also propose a pipeline that uses optical flow principal components for motion inpainting and warp field smoothing. Our method gives a 3x speed improvement compared to previous optimization methods.

Paper: PDF Video: MP4

|

|

Deep 3D Capture: Geometry and Reflectance from Sparse Multi-View Images

CVPR 2020

We introduce a novel learning-based method to reconstruct the high-quality geometry and complex, spatially-varying BRDF of an arbitrary object from a sparse set of only six images captured by wide-baseline cameras under collocated point lighting. We construct high-quality geometry and per-vertex BRDFs.

Paper: PDF Video: MP4

|

|

Deep Recurrent Network for Fast and Full-Resolution Light Field Deblurring

Sig. Proc. Letters 2019

We propose a novel light field recurrent deblurring network that is trained under a 6-degree-of-freedom camera motion-blur model. By combining real light fields captured using a Lytro Illum with synthetic light field renderings of 3D scenes from UnrealCV, we provide a large-scale blurry light field dataset to train the network.

Paper: PDF

|

|

A Differential Theory of Radiative Transfer

SIGGRAPH Asia 2019

We introduce a differential theory of radiative transfer, which

shows how individual components of the radiative transfer equation

(RTE) can be differentiated with respect to arbitrary differentiable

changes of a scene. Our theory encompasses the same generality as the

standard RTE, allowing differentiation while accurately handling a

large range of light transport phenomena such as volumetric absorption

and scattering, anisotropic phase functions, and heterogeneity.

Paper: PDF

|

|

Learning Generative Models for Rendering Specular Microgeometry

SIGGRAPH Asia 2019

Rendering specular material appearance is a core problem of

computer graphics. We propose a novel direction: learning the

high-frequency directional patterns from synthetic or measured

examples, by training a generative adversarial network (GAN). A key

challenge in applying GAN synthesis to spatially-varying BRDFs is

evaluating the reflectance for a single location and direction without

the cost of evaluating the whole hemisphere. We resolve this using a

novel method for partial evaluation of the generator network.

Paper: PDF

Video: MP4

|

|

Deep CG2Real: Synthetic-to-Real Translation via Image Disentanglement

ICCV 2019

We present a method to improve the visual realism of low-quality,

synthetic images, e.g. OpenGL renderings. Training an unpaired

synthetic-to-real translation network in image space is severely

under-constrained and produces visible artifacts. Instead, we propose

a semi-supervised approach that operates on the disentangled shading

and albedo layers of the image. Our two-stage pipeline first learns to

predict accurate shading in a supervised fashion using physically-based

renderings as targets, and further increases the realism of the

textures and shading with an improved CycleGAN network.

Paper: PDF

|

|

Selfie Video Stabilization

PAMI 2019

We propose a novel algorithm for stabilizing selfie videos. Our goal

is to automatically generate stabilized video that has optimal smooth

motion in the sense of both foreground and background. The key insight

is that non-rigid foreground motion in selfie videos can be analyzed

using a 3D face model, and background motion can be analyzed using

optical flow.

Paper: PDF

Video (ECCV 18): MP4

|

|

Deep View Synthesis from Sparse Photometric Images

SIGGRAPH 2019

In this paper, we synthesize novel viewpoints across a wide range of

viewing directions (covering a 60-degree cone) from a sparse set of

just six viewing directions. Our method is based on a deep

convolutional network trained to directly synthesize new views from the

six input views. This network combines 3D convolutions on a plane

sweep volume with a novel per-view per-depth plane attention map

prediction network to effectively aggregate multi-view appearance.

Paper: PDF

Supplementary: PDF

Video: MOV

|

|

Local Light Field Fusion: Practical View Synthesis with Prescriptive Sampling Guidelines

SIGGRAPH 2019

We present a practical and robust deep learning solution for capturing

and rendering novel views of complex real world scenes for virtual

exploration. We propose an algorithm for view synthesis from an

irregular grid of sampled views that first expands each sampled view

into a local light field via a multiplane image (MPI)

scene representation, then renders novel views by blending adjacent

local light fields. We extend traditional plenoptic sampling theory to

derive a bound that specifies precisely how densely users should sample

views of a given scene when using our algorithm.

Paper: PDF

Video: YouTube

Code: Project
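As an illustration of how an MPI is turned into pixels, here is a minimal Python sketch of back-to-front alpha ("over") compositing for a single pixel. It assumes the RGBA planes have already been reprojected into the target view (the per-plane homography warp is omitted), and the function name composite_mpi_pixel is hypothetical; it is not taken from the project's released code, and the blending of adjacent local light fields is not shown.

```python
from typing import List, Tuple

# One MPI plane sample at a pixel: straight (non-premultiplied) RGB plus alpha.
RGBA = Tuple[float, float, float, float]


def composite_mpi_pixel(planes: List[RGBA]) -> Tuple[float, ...]:
    """Composite one pixel through MPI planes with the 'over' operator.

    planes[0] is the farthest plane and planes[-1] the nearest. The planes
    are assumed to be already warped into the target view; that
    reprojection step is omitted in this sketch.
    """
    out: Tuple[float, ...] = (0.0, 0.0, 0.0)  # black background behind all planes
    for r, g, b, a in planes:
        # Over operator: the nearer plane covers a fraction `a` of what lies behind it.
        out = tuple(a * c + (1.0 - a) * o for c, o in zip((r, g, b), out))
    return out


# Usage: a half-transparent blue plane in front of an opaque red plane.
print(composite_mpi_pixel([(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 1.0, 0.5)]))  # → (0.5, 0.0, 0.5)
```

Compositing from back to front keeps the loop to a single running color per pixel, which is why MPI rendering is cheap once the planes are warped.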

|

|

Accurate Appearance Preserving Filtering for Rendering Displacement-Mapped Surfaces

SIGGRAPH 2019

In this paper, we introduce a new method that prefilters displacement

maps and BRDFs jointly and constructs SVBRDFs at reduced

resolutions. These SVBRDFs preserve the appearance of the input models

by capturing both shadowing-masking and interreflection effects.

Further, we show that the 6D scaling function can be factorized into a

2D function of surface location and a 4D function of direction. By

exploiting the smoothness of these functions, we develop a simple and

efficient factorization method that does not require computing the full

scaling function.

Paper: PDF

Video: MP4

Slides: PPTX

Code and Data: ZIP

|

|

Single Image Portrait Relighting

SIGGRAPH 2019

We present a system for portrait relighting: a neural network that

takes as input a single RGB image of a portrait taken with a standard

cellphone camera in an unconstrained environment, and from that image

produces a relit image of that subject as though it were illuminated

according to any provided environment map.

Paper: PDF

Supplementary: PDF

Video: M4V

|

|

Pushing the Boundaries of View Extrapolation with Multiplane Images

CVPR 2019 Best Paper Finalist

We present a theoretical analysis showing how the range of views that

can be rendered from a multi-plane image (MPI) increases linearly with

the MPI disparity sampling frequency, as well as a novel MPI

prediction procedure that theoretically enables view extrapolations of

up to 4x the lateral viewpoint movement allowed by prior work.

Paper: PDF

Video: MP4

YouTube: Link

With Appendices: PDF

|

|

Robust Video Stabilization by Optimization in CNN Weight Space

CVPR 2019

We directly model the appearance change as

the dense optical flow field of consecutive frames, which leads to a

large scale non-convex problem. By solving the problem in the CNN

weight space rather than directly for image pixels, we make it a

viable formulation for video stabilization. Our method trains the CNN

from scratch on each specific input example, and intentionally

overfits to produce the best result.

Paper: PDF

Video: MP4

|

|

Deep HDR Video from Sequences with Alternating Exposures

Eurographics 2019

A practical way to generate a high dynamic range (HDR) video using

off-the-shelf cameras is to capture a sequence with alternating

exposures and reconstruct the missing content at each

frame. Unfortunately, existing approaches are typically slow and are

not able to handle challenging cases. In this paper, we propose a

learning-based approach to address this difficult problem. To do this,

we use two sequential convolutional neural networks (CNNs) to model the

entire HDR video reconstruction process.

Paper: PDF

Video: MP4

|

|

Analysis of Sample Correlations for Monte Carlo Rendering

Eurographics 2019

Monte Carlo integrators sample the integrand at specific sample point locations. The distribution of these sample points determines convergence rate and noise in the final renderings. The characteristics of such distributions can be uniquely represented in terms of correlations of sampling point locations. We aim to provide a comprehensible and accessible overview of techniques developed over the last decade to analyze such correlations.

Paper: PDF

|

|

Connecting Measured BRDFs to Analytic BRDFs by Data-Driven Diffuse-Specular Separation

SIGGRAPH Asia 2018

We propose a novel framework for connecting measured and analytic

BRDFs, by separating a measured BRDF into diffuse and specular

components. This enables measured BRDF editing, a compact measured

BRDF model, and insights in relating measured and analytic BRDFs. We

also design a robust analytic fitting algorithm for two-lobe

materials.

Paper: PDF

Supplementary: PDF

|

|

Learning to Reconstruct Shape and Spatially-Varying Reflectance from a Single Image

SIGGRAPH Asia 2018

We demonstrate that we can recover non-Lambertian, spatially-varying BRDFs

and complex geometry belonging to any arbitrary shape class, from a

single RGB image captured under a combination of unknown environment

illumination and flash lighting. We achieve this by training a deep

neural network to regress shape and reflectance from the image. We

incorporate an in-network rendering layer that includes global illumination.

Paper: PDF

Video: MOV

Supplementary: PDF

|

|

Height-from-Polarisation with Unknown Lighting or Albedo

PAMI Aug 2018

We present a method for estimating surface height directly from a

single polarisation image simply by solving a large, sparse system of

linear equations. Our method is applicable to dielectric objects exhibiting

diffuse and specular reflectance, though lighting and albedo must be known.

We relax this requirement by showing that either spatially varying albedo or

illumination can be estimated from the polarisation image alone using

nonlinear methods. We believe that our method is the first passive, monocular

shape-from-x technique that enables well-posed height estimation with

only a single, uncalibrated illumination condition.

Paper: PDF

|

|

Selfie Video Stabilization

ECCV 2018

We propose a novel algorithm for stabilizing selfie videos. Our goal

is to automatically generate stabilized video that has optimal smooth

motion in the sense of both foreground and background. The key insight

is that non-rigid foreground motion in selfie videos can be analyzed

using a 3D face model, and background motion can be analyzed using

optical flow.

Paper: PDF

Video: MP4

|

|

Rendering Specular Microgeometry with Wave Optics

SIGGRAPH 2018

We design the first rendering algorithm based on a wave optics model,

but also able to compute spatially-varying specular highlights with

high-resolution detail. We compute a wave optics reflection integral

over the coherence area; our solution is based on approximating the

phase-delay grating representation of a micron-resolution surface

heightfield using Gabor kernels. Our results show both

single-wavelength and spectral solutions to reflection from common

everyday objects, such as brushed, scratched and bumpy metals.

Paper: PDF

Video: MP4

Supplementary: Supplementary

|

|

Deep Image-Based Relighting from Optimal Sparse Samples

SIGGRAPH 2018

We present an image-based relighting method that can synthesize scene

appearance under novel, distant illumination from the visible

hemisphere, from only five images captured under pre-defined

directional lights. We show that by combining a custom-designed

sampling network with the relighting network, we can jointly learn

both the optimal input light directions and the relighting function.

Paper: PDF

Video: MOV

Project: Code, Data

|

|

Analytic Spherical Harmonic Coefficients for Polygonal Area Lights

SIGGRAPH 2018

We present an efficient closed-form solution for projection of uniform

polygonal area lights to spherical harmonic coefficients of arbitrary

order, enabling easy adoption of accurate area lighting in PRT systems,

with no modifications required to the core PRT framework. Our method

only requires computing zonal harmonic (ZH) coefficients, for which we

introduce a novel recurrence relation.

Paper: PDF

Video: MOV

Project: Code, Data
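The novel ZH recurrence introduced in this paper is not reproduced here. For orientation only, the sketch below evaluates the zonal harmonics Y_l^0 using the standard Bonnet recurrence for Legendre polynomials, (l+1) P_{l+1}(x) = (2l+1) x P_l(x) - l P_{l-1}(x), with the orthonormal normalization Y_l^0(theta) = sqrt((2l+1) / (4 pi)) P_l(cos theta). Function names are hypothetical.

```python
import math
from typing import List


def legendre_polynomials(lmax: int, x: float) -> List[float]:
    """Return [P_0(x), ..., P_lmax(x)] using Bonnet's recurrence."""
    if lmax < 0:
        raise ValueError("lmax must be non-negative")
    p = [1.0]
    if lmax >= 1:
        p.append(x)
    for l in range(1, lmax):
        # (l + 1) P_{l+1} = (2l + 1) x P_l - l P_{l-1}
        p.append(((2 * l + 1) * x * p[l] - l * p[l - 1]) / (l + 1))
    return p


def zonal_harmonics(lmax: int, cos_theta: float) -> List[float]:
    """Evaluate the orthonormal zonal (m = 0) harmonics Y_l^0 for l = 0..lmax."""
    return [
        math.sqrt((2 * l + 1) / (4.0 * math.pi)) * pl
        for l, pl in enumerate(legendre_polynomials(lmax, cos_theta))
    ]
```

A recurrence keeps the cost linear in the band limit, which matters when projecting lights to high SH orders.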

|

|

Deep Adaptive Sampling for Low Sample Count Rendering

EGSR 2018

Recently, deep learning approaches have proven successful at removing

noise from Monte Carlo (MC) rendered images at extremely low sampling

rates, e.g., 1-4 samples per pixel (spp). While these methods provide

dramatic speedups, they operate on uniformly sampled MC rendered

images. We address this issue by proposing a deep learning

approach for joint adaptive sampling and reconstruction of MC rendered

images with extremely low sample counts.

Paper: PDF

|

|

Deep Hybrid Real and Synthetic Training for Intrinsic Decomposition

EGSR 2018

Intrinsic image decomposition is the process of separating the

reflectance and shading layers of an image. In this paper, we propose

to systematically address this problem using a deep convolutional

neural network (CNN). In addition to directly supervising the network

using synthetic images, we train the network by requiring it to

produce the same reflectance for a pair of images of the same

real-world scene with different illuminations. Furthermore, we improve

the results by incorporating a bilateral solver layer into our system

during both training and test stages.

Paper: PDF

|

|

Image to Image Translation for Domain Adaptation

CVPR 2018

We propose a general framework for unsupervised domain adaptation,

which allows deep neural networks trained on a source domain to be

tested on a different target domain without requiring any training

annotations in the target domain. We apply our method to domain

adaptation between MNIST, USPS, and SVHN datasets, and Amazon, Webcam

and DSLR Office datasets in classification tasks, and also between

GTA5 and Cityscapes datasets for a segmentation task. We demonstrate

state-of-the-art performance on each of these datasets.

Paper: PDF

|

|

Learning to See through Turbulent Water

WACV 2018

This paper proposes training a deep convolutional neural network to

undistort dynamic refractive effects using only a single image.

Unlike prior work on water undistortion, our method is trained end-to-end, requires only a single image, and does not use a ground-truth template at test time.

Paper: PDF

Project: Code/Data

|

|

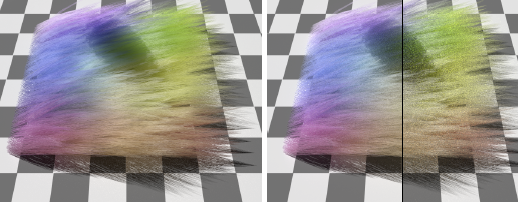

A BSSRDF Model for Efficient Rendering of Fur with Global Illumination

SIGGRAPH Asia 2017

We present the first global illumination model, based on dipole

diffusion for subsurface scattering, to approximate light bouncing

between individual fur fibers. We model complex light and fur

interactions as subsurface scattering, and use a simple neural network

to convert from fur fibers' properties to scattering parameters.

Paper: PDF

Video: QT

|

|

Learning to Synthesize a 4D RGBD Light Field from a Single Image

ICCV 2017

We present a machine learning algorithm that takes as input a 2D RGB

image and synthesizes a 4D RGBD light field (color and depth of the

scene in each ray direction). For training, we introduce the largest

public light field dataset, consisting of over 3300 plenoptic camera

light fields of scenes containing flowers and plants.

Paper: PDF

Video: MPEG

Supplementary: PDF

|

|

Linear Differential Constraints for Photo-polarimetric Height Estimation

ICCV 2017

In this paper we present a differential approach to photo-polarimetric

shape estimation. We propose several alternative differential

constraints based on polarisation and photometric shading information

and show how to express them in a unified partial differential

system. Our method uses the image ratios technique to combine shading

and polarisation information in order to directly reconstruct surface

height, without first computing surface normal vectors.

Paper: PDF

|

|

Depth and Image Restoration from Light Field in a Scattering Medium

ICCV 2017

Traditional imaging methods and computer vision algorithms are often

ineffective when images are acquired in scattering media, such as

underwater, fog, and biological tissue. Here, we explore the use of

light field imaging and algorithms for image restoration and depth

estimation that address the image degradation from the medium.

We propose shearing and refocusing multiple views of the light field

to recover a single image of higher quality than what is possible from a

single view. We demonstrate the benefits of our method through

extensive experimental results in a water tank.

Paper: PDF
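Shearing and refocusing a light field can be illustrated with the classic shift-and-add operation. The minimal sketch below works on a 1D light field with integer shifts and circular boundaries (both simplifying assumptions); it does not model the scattering medium or the restoration steps of the paper, and refocus is a hypothetical name. When the slope matches the scene disparity, the views align, and averaging them suppresses noise that is independent across views.

```python
from typing import Dict, List

Signal = List[float]


def refocus(views: Dict[int, Signal], slope: int) -> Signal:
    """Shift-and-add refocusing of a 1D light field.

    `views` maps an angular index u to one image row. Each view is
    sheared by slope * u pixels (integer shift, circular boundary) and
    the sheared views are averaged.
    """
    n = len(next(iter(views.values())))
    out = [0.0] * n
    for u, row in views.items():
        for x in range(n):
            out[x] += row[(x + slope * u) % n]
    return [v / len(views) for v in out]


# Usage: five views of a row with a disparity of 1 pixel per view.
base = [0.0, 1.0, 4.0, 2.0, 3.0, 0.0, 5.0, 1.0]
views = {u: [base[(x - u) % len(base)] for x in range(len(base))] for u in range(-2, 3)}
print(refocus(views, 1))  # refocusing at the true disparity recovers `base`
```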

|

|

An Efficient and Practical Near and Far Field Fur Reflectance Model

SIGGRAPH 2017

We derive a compact BCSDF model for fur reflectance with only 5

lobes. Our model unifies hair and fur rendering, making it easy to

implement within standard hair rendering software. By exploiting

piecewise analytic integration, our method further enables a

multi-scale rendering scheme that transitions between near and

far-field rendering smoothly and efficiently for the first time.

Paper: PDF

Video: QT

Papers Trailer: Video

|

|

Light Field Video Capture Using a Learning-Based Hybrid Imaging System

SIGGRAPH 2017

We develop a hybrid imaging system, adding a standard video camera to

a light field camera to capture the temporal information. Given a 3

fps light field sequence and a standard 30 fps 2D video, our system

can then generate a full light field video at 30 fps. We adopt a

learning-based approach, which can be decomposed into two steps:

spatio-temporal flow estimation and appearance estimation,

enabling consumer light field videography.

Paper: PDF

Video: MPEG

Code/Data: Project

|

|

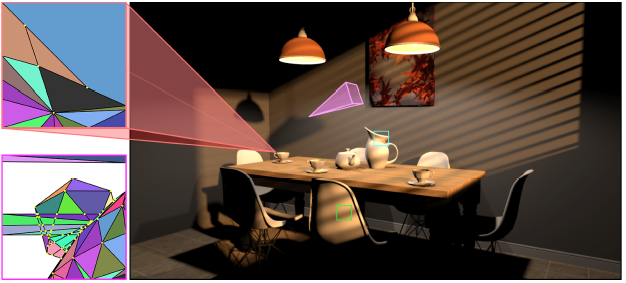

Patch-Based Optimization for Image-Based Texture Mapping

SIGGRAPH 2017

Image-based texture mapping is a common way of producing texture

maps for geometric models of real-world objects. We propose a novel

global patch-based optimization system to synthesize the aligned

images. Specifically, we use patch-based synthesis to reconstruct a set

of photometrically-consistent aligned images by drawing information

from the source images. Our optimization system is simple, flexible,

and more suitable for correcting large misalignments than other

techniques such as local warping.

Paper: PDF

Video: MPEG

Supplementary: PDF

|

|

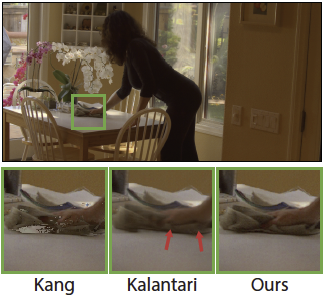

Deep High Dynamic Range Imaging of Dynamic Scenes

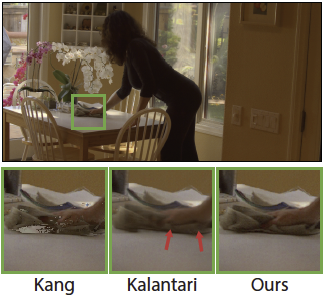

SIGGRAPH 2017

Producing a high dynamic range (HDR) image from a set of images with

different exposures is a challenging process for dynamic scenes.

We use a convolutional neural network (CNN) as our learning model and

present and compare three different system architectures to model the

HDR merge process. Furthermore, we create a large dataset of input LDR

images and their corresponding ground truth HDR images to train our

system.

Paper: PDF

Project Page: Code/Data
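For context, the classic non-learned way to merge an exposure stack is a per-pixel weighted average of the radiance estimates z / t. The sketch below assumes linear (response-corrected) pixel values in [0, 1], an already-aligned static stack, and a simple triangle weight; it is a baseline for comparison, not the CNN-based merge of this paper, which targets the dynamic scenes where this baseline produces ghosting. Names are hypothetical.

```python
from typing import Sequence


def hat_weight(z: float) -> float:
    """Triangle weight on [0, 1]: trust mid-tones, distrust near-black and saturated pixels."""
    return max(0.0, 1.0 - abs(2.0 * z - 1.0))


def merge_hdr_pixel(values: Sequence[float], exposure_times: Sequence[float]) -> float:
    """Weighted average of per-exposure radiance estimates z / t for one pixel.

    `values` are assumed linear pixel values in [0, 1] from a static,
    aligned exposure stack; `exposure_times` are in consistent units.
    """
    if len(values) != len(exposure_times):
        raise ValueError("need one exposure time per value")
    num = den = 0.0
    for z, t in zip(values, exposure_times):
        w = hat_weight(z)
        num += w * z / t
        den += w
    if den == 0.0:
        raise ValueError("every exposure is fully black or saturated")
    return num / den


# Usage: the saturated long exposure gets zero weight.
print(merge_hdr_pixel([1.0, 0.5], [4.0, 1.0]))  # → 0.5
```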

|

|

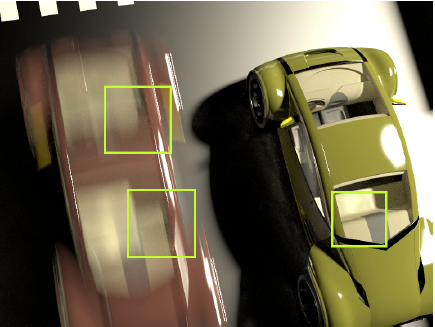

Light Field Blind Motion Deblurring

CVPR 2017

By analyzing the motion-blurred light field in

the primal and Fourier domains, we develop intuition into the effects

of camera motion on the light field, show the advantages of capturing

a 4D light field instead of a conventional 2D image for

motion deblurring, and derive simple methods of motion deblurring in

certain cases. We then present an algorithm to blindly deblur light

fields of general scenes without any estimation of scene geometry.

Paper: PDF

|

|

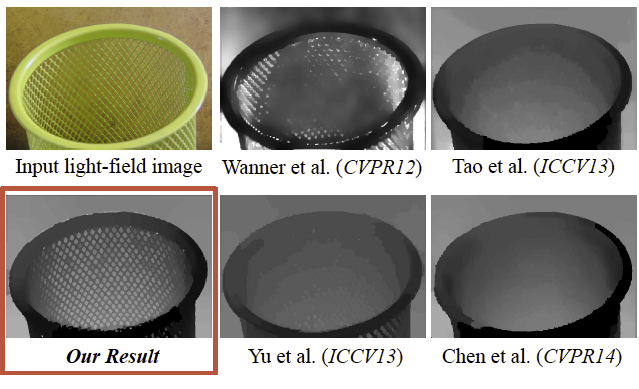

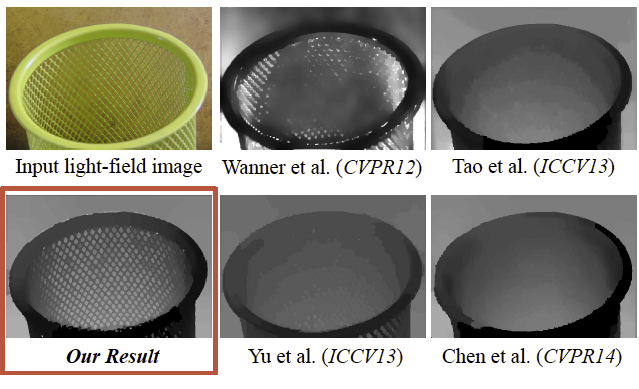

Robust Energy Minimization for BRDF-Invariant Shape from Light Fields

CVPR 2017

We present a variational energy minimization framework for robust

recovery of shape in multiview stereo with complex, unknown BRDFs. While

our formulation is general, we demonstrate its efficacy on shape

recovery using a single light field image, where the microlens array

may be considered as a realization of a purely translational multiview

stereo setup. Our formulation automatically balances contributions from

texture gradients, traditional Lambertian photoconsistency,

an appropriate BRDF-invariant PDE and a smoothness prior.

Paper: PDF

Supplementary

|

|

Gradient-Domain Vertex Connection and Merging

EGSR 2017

We present gradient-domain vertex connection and merging (G-VCM), a new

gradient-domain technique motivated by primal domain VCM. Our method

enables robust gradient sampling in the presence of complex transport,

such as specular-diffuse-specular paths, while retaining the denoising

power and fast convergence of gradient-domain bidirectional path

tracing.

Paper: PDF

|

|

Multiple Axis-Aligned Filters for Rendering of Combined Distribution Effects

EGSR 2017

We present a novel filter for efficient rendering of combined effects,

involving soft shadows and depth of field, with global (diffuse

indirect) illumination. We approximate the wedge spectrum with

multiple axis-aligned filters, marrying the speed of axis-aligned

filtering with an even more accurate (compact and tighter)

representation than sheared filtering.

Paper: PDF

Video: AVI

|

|

SVBRDF-Invariant Shape and Reflectance Estimation from a Light-Field Camera

PAMI 2017

Light-field cameras have recently emerged as a powerful tool for

one-shot passive 3D shape capture. However, obtaining the shape of

glossy objects like metals or plastics remains challenging, since

standard Lambertian cues like photo-consistency cannot be easily

applied. In this paper, we derive a spatially-varying

(SV)BRDF-invariant theory for recovering 3D shape and reflectance from

light-field cameras.

Paper: PDF

|

|

Antialiasing Complex Global Illumination Effects in Path-space

TOG 2017

We present the first method to efficiently predict antialiasing

footprints to pre-filter color-, normal-, and displacement-mapped

appearance in the context of multi-bounce global illumination. We

derive Fourier spectra for radiance and importance functions that allow

us to compute spatial-angular filtering footprints at path vertices.

Paper: PDF

|

|

Learning-Based View Synthesis for Light Field Cameras

SIGGRAPH Asia 2016

We propose a novel learning-based approach to synthesize new views

from a sparse set of input views for light field cameras. We break view

synthesis into disparity and color estimation components, and use two sequential

convolutional neural networks to model these two components and train

both networks simultaneously by minimizing the error between the

synthesized and ground truth images.

Paper: PDF

Video: MPEG

Dataset: Project Page

|

|

Minimal BRDF Sampling for Two-Shot Near-Field Reflectance Acquisition

SIGGRAPH Asia 2016

We develop a method to acquire the BRDF of a homogeneous flat sample

from only two images, taken by a near-field perspective camera, and lit

by a directional light source. We develop a mathematical framework to

estimate error from a given set of measurements, including the use of

multiple measurements in an image simultaneously, as needed for

acquisition from near-field setups.

Paper: PDF

Supplementary: PDF

Comparison Video: QT

|

|

Downsampling Scattering Parameters for Rendering Anisotropic Media

SIGGRAPH Asia 2016

Volumetric micro-appearance models have provided remarkably high-quality

renderings, but are highly data intensive and usually require tens of

gigabytes in storage. We introduce a joint optimization of single-scattering

albedos and phase functions to accurately downsample heterogeneous and

anisotropic media.

Paper: PDF

Video: MPEG

|

|

Photometric Stereo in a Scattering Medium

PAMI 2016

Photometric stereo is widely used for 3D reconstruction. However, its

use in scattering media such as water, biological tissue and fog has

been limited until now, because of forward scattered light from both

the source and object, as well as light scattered back from the medium

(backscatter). Here we make three contributions to address the key

modes of light propagation, under the common single scattering

assumption for dilute media.

Paper: PDF
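As a reference point, classic photometric stereo in clear air assumes a Lambertian surface, I_k = albedo * dot(l_k, n), and recovers the scaled normal g = albedo * n from three known light directions by solving a 3x3 linear system. The sketch below implements only that baseline, with no attached shadows and no forward scatter or backscatter (exactly the effects this paper addresses); function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix3 = List[List[float]]


def _det3(m: Matrix3) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a: Matrix3, b: Sequence[float]) -> List[float]:
    """Solve a x = b by Cramer's rule (adequate for a single 3x3 system)."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions are (nearly) coplanar")
    x = []
    for col in range(3):
        m = [list(row) for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(_det3(m) / d)
    return x


def lambertian_photometric_stereo(
    lights: Matrix3, intensities: Sequence[float]
) -> Tuple[float, Tuple[float, float, float]]:
    """Recover (albedo, unit normal) from three images under known distant lights."""
    g = _solve3(lights, intensities)  # g = albedo * n
    albedo = math.sqrt(sum(c * c for c in g))
    return albedo, (g[0] / albedo, g[1] / albedo, g[2] / albedo)
```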

|

|

Linear Depth Estimation from an Uncalibrated, Monocular Polarisation Image

ECCV 2016

We present a method for estimating surface height directly from a

single polarisation image simply by solving a large, sparse system of

linear equations. To do so, we show how to express

polarisation constraints as equations that are linear in the unknown

depth. Our method is applicable to objects with uniform albedo

exhibiting diffuse and specular reflectance. We believe that

our method is the first monocular, passive shape-from-x technique that

enables well-posed depth estimation with only a single, uncalibrated

illumination condition.

Paper: PDF
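A polarisation image is commonly summarized per pixel by its mean intensity, degree of linear polarisation rho, and phase angle psi, obtained by fitting the sinusoid I(phi) = A + B cos(2 phi - 2 psi) to measurements through a rotated linear polariser. The sketch below assumes exactly three polariser angles (0, 45 and 90 degrees), an illustrative choice rather than this paper's capture setup; it covers only this preprocessing step, not the linear depth solve. Note that psi is recovered only modulo pi. The function name is hypothetical.

```python
import math
from typing import Tuple


def polarisation_from_three_angles(i0: float, i45: float, i90: float) -> Tuple[float, float, float]:
    """Return (mean intensity, degree of linear polarisation, phase angle).

    Assumes I(phi) = A + B * cos(2 * phi - 2 * psi) sampled at phi = 0,
    45 and 90 degrees. The phase angle is returned in (-pi/2, pi/2].
    """
    s0 = i0 + i90          # 2A
    s1 = i0 - i90          # 2B cos(2 psi)
    s2 = 2.0 * i45 - s0    # 2B sin(2 psi)
    if s0 <= 0.0:
        raise ValueError("total intensity must be positive")
    rho = math.sqrt(s1 * s1 + s2 * s2) / s0   # B / A
    psi = 0.5 * math.atan2(s2, s1)
    return s0 / 2.0, rho, psi
```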

|

|

A 4D Light-Field Dataset and CNN Architectures for Material Recognition

ECCV 2016

We introduce a new light-field dataset of materials, and take

advantage of the recent success of deep learning to perform material

recognition on the 4D light-field. Our dataset contains 12 material

categories, each with 100 images taken with a Lytro Illum. Since

recognition networks have not been trained on 4D images before, we

propose and compare several novel CNN architectures to train on

light-field images. In our experiments, the best performing CNN

architecture achieves a 7% boost compared with 2D image classification.

Paper: PDF

HTML Comparison

dataset (2D thumbnail)

Full Dataset (16GB)

|

|

Sparse Sampling for Image-Based SVBRDF Acquisition

Material Appearance Modeling Workshop, 2016

We acquire the data-driven spatially-varying (SV)BRDF of a flat sample

from only a small number of images (typically 20). We generalize the

homogeneous BRDF acquisition work of Nielsen et al., who derived an

optimal minimal set of lighting/view directions. We demonstrate our

method on SVBRDF measurements of new flat materials, showing that full

data-driven SVBRDF acquisition is now possible from a sparse set of

only about 20 light-view pairs.

Paper: PDF

|

|

Position-Normal Distributions for Efficient Rendering of Specular Microstructure

SIGGRAPH 2016

Specular BRDF rendering traditionally approximates surface

microstructure using a smooth normal distribution, but this ignores

glinty effects, easily observable in the real world. We treat a

specular surface as a four-dimensional position-normal distribution,

and fit this distribution using millions of 4D Gaussians, which we

call elements. This leads to closed-form solutions to the required

BRDF evaluation and sampling queries, enabling the first practical

solution to rendering specular microstructure.

Paper: PDF

Video: MP4

Press: UCSD

PhysOrg

Digital Trends

Eureka Alert

Tech Crunch

|

|

Shape Estimation from Shading, Defocus, and Correspondence Using Light-Field Angular Coherence

PAMI 2016

We show that combining all three sources of information (defocus,

correspondence, and shading) outperforms state-of-the-art light-field

depth estimation algorithms in multiple scenarios.

Paper: PDF

|

|

SVBRDF-Invariant Shape and Reflectance Estimation from Light-Field Cameras

CVPR 2016

Light-field cameras have recently emerged as a powerful tool for one-shot passive 3D shape capture. However, obtaining the shape of glossy objects like metals, plastics or ceramics remains challenging, since standard Lambertian cues like photo-consistency cannot be easily applied. In this paper, we derive a spatially-varying (SV)BRDF-invariant theory for recovering 3D shape and reflectance from light-field cameras.

Paper: PDF

|

|

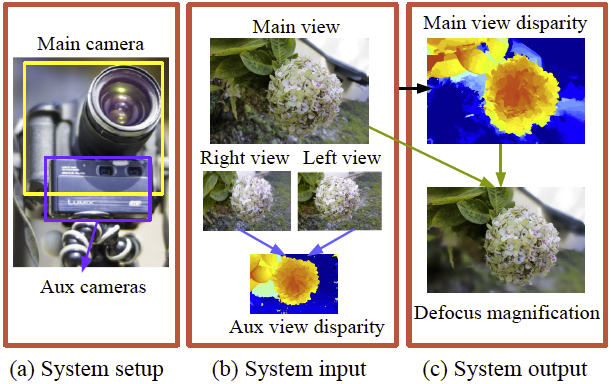

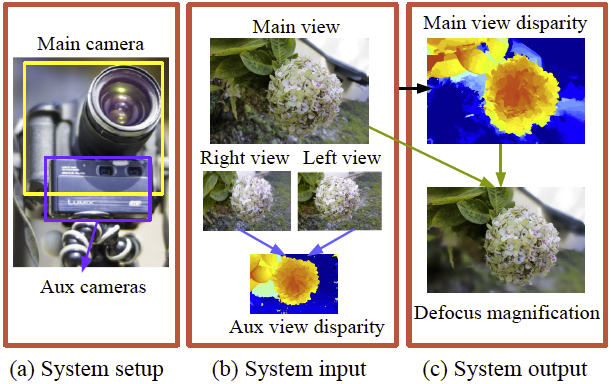

Depth from Semi-Calibrated Stereo and Defocus

CVPR 2016

In this work, we propose a multi-camera system where we combine a main

high-quality camera with two low-res auxiliary cameras. Our goal is,

given the low-res depth map from the auxiliary cameras, to generate a

depth map from the viewpoint of the main camera. Ours is a

semi-calibrated system, where the auxiliary stereo cameras are

calibrated, but the main camera has an interchangeable lens, and is

not calibrated beforehand.

Paper: PDF

|

|

Depth Estimation with Occlusion Modeling Using Light-field Cameras

PAMI 2016

In this paper, an occlusion-aware depth estimation algorithm is

developed; the method also enables identification of occlusion

edges, which may be useful in other applications. It can be shown that

although photo-consistency is not preserved for pixels at occlusions,

it still holds in approximately half the viewpoints. Moreover, the line

separating the two view regions (occluded object vs. occluder) has

the same orientation as that of the occlusion edge in the spatial

domain. By ensuring photo-consistency in only the occluded view

region, depth estimation can be improved.

Paper: PDF

|

|

Fast 4D Sheared Filtering for Interactive Rendering of Distribution Effects

ACM Trans. Graphics Dec 2015

We present a new approach for fast sheared filtering on the GPU. Our

algorithm factors the 4D sheared filter into four 1D filters. We derive

complexity bounds for our method, showing that the per-pixel complexity

is reduced from O(n^2 l^2) to O(nl), where n is the linear filter width

(filter size is O(n^2)) and l is the (usually very small) number of

samples for each dimension of the light or lens per pixel (spp is

l^2). We thus reduce sheared filtering overhead dramatically.

Paper: PDF

Video: MPEG
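The factorization idea can be illustrated in a simpler setting: an axis-aligned 2D Gaussian applied as two 1D passes gives the same result as direct 2D convolution, while cutting per-pixel work from n^2 taps to about 2n. This minimal sketch is not the paper's 4D sheared filter (there is no shear, and the light/lens dimensions are omitted); it uses clamp-to-edge boundaries, and all names are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]  # indexed as img[y][x]


def gaussian_kernel(radius: int, sigma: float) -> List[float]:
    """Normalized 1D Gaussian taps for offsets -radius..radius."""
    taps = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(taps)
    return [t / total for t in taps]


def _clamp(i: int, n: int) -> int:
    return min(max(i, 0), n - 1)  # clamp-to-edge boundary handling


def filter_rows(img: Image, k: List[float]) -> Image:
    """Convolve every row with the 1D kernel k: n taps per pixel."""
    r = len(k) // 2
    w = len(img[0])
    return [[sum(kv * row[_clamp(x + i - r, w)] for i, kv in enumerate(k)) for x in range(w)]
            for row in img]


def transpose(img: Image) -> Image:
    return [list(col) for col in zip(*img)]


def separable_filter(img: Image, k: List[float]) -> Image:
    """Horizontal pass, then vertical pass: about 2n taps per pixel."""
    return transpose(filter_rows(transpose(filter_rows(img, k)), k))


def direct_filter(img: Image, k: List[float]) -> Image:
    """Full 2D convolution with the outer-product kernel: n^2 taps per pixel."""
    r = len(k) // 2
    h, w = len(img), len(img[0])
    return [[sum(k[j] * k[i] * img[_clamp(y + j - r, h)][_clamp(x + i - r, w)]
                 for j in range(len(k)) for i in range(len(k)))
             for x in range(w)]
            for y in range(h)]
```

Because clamping acts independently on each axis, the two results agree up to floating-point round-off.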

|

|

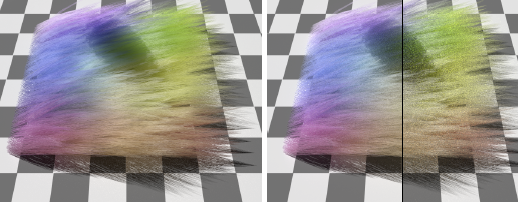

Physically-Accurate Fur Reflectance: Modeling, Measurement and Rendering

SIGGRAPH Asia 2015

In this paper, we develop a physically-accurate reflectance model for

fur fibers. Based on anatomical literature and measurements, we develop

a double cylinder model for the reflectance of a single fur fiber,

where an outer cylinder represents the biological observation of a

cortex covered by multiple cuticle layers, and an inner cylinder

represents the scattering interior structure known as the medulla.

Paper: PDF

MS thesis of Chiwei Tseng

Data

|

|

Anisotropic Gaussian Mutations for Metropolis Light Transport through Hessian-Hamiltonian Dynamics

SIGGRAPH Asia 2015

We present a Markov chain Monte Carlo (MCMC) rendering algorithm that

extends Metropolis Light Transport by automatically and explicitly

adapting to the local shape of the integrand, thereby increasing the

acceptance rate. Our algorithm characterizes the local behavior of

throughput in path space using its gradient as well as its Hessian. In

particular, the Hessian is able to capture the strong anisotropy of the

integrand.

Paper: PDF

|

|

On Optimal, Minimal BRDF Sampling for Reflectance Acquisition

SIGGRAPH Asia 2015

In this paper, we address the problem of reconstructing a measured BRDF

from a limited number of samples. We present a novel mapping of the

BRDF space, allowing for extraction of descriptive principal components

from measured databases, such as the MERL BRDF database. We optimize

for the best sampling directions, and explicitly provide the optimal

set of incident and outgoing directions in the Rusinkiewicz

parameterization for n = 1, 2, 5, 10, 20 samples. Based on the

principal components, we describe a method for accurately

reconstructing BRDF data from these limited sets of samples.

Paper: PDF

|

|

Photometric Stereo in a Scattering Medium

ICCV 2015

Photometric stereo is widely used for 3D reconstruction. However, its

use in scattering media such as water, biological tissue and fog has

been limited until now, because of forward scattered light from both

the source and object, as well as light scattered back from the medium

(backscatter). Here we make three contributions to address the key

modes of light propagation, under the common single

scattering assumption for dilute media.

Paper: PDF

|

|

Occlusion-aware Depth Estimation Using Light-field Cameras

ICCV 2015

In this paper, we develop a depth estimation algorithm for light field

cameras that treats occlusion explicitly; the method also

enables identification of occlusion edges, which may be useful in other

applications. We show that, although pixels at occlusions do not

preserve photo-consistency in general, they are still consistent in

approximately half the viewpoints.

Paper: PDF
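The key observation can be sketched as a cost function: given a pixel's angular samples at a candidate depth and the orientation of the spatial occlusion edge (assumed known here, for example from an edge detector), split the views by a line with that orientation and keep the lower of the two variances. This is a minimal illustration under those assumptions, not the paper's full algorithm; occlusion_aware_cost is a hypothetical name.

```python
import math
from statistics import pvariance
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (u, v, value) for one angular sample


def occlusion_aware_cost(samples: List[Sample], edge_angle: float) -> float:
    """Photo-consistency cost that keeps only the more consistent half of the views.

    The dividing line passes through the angular center with direction
    (cos edge_angle, sin edge_angle). Raises ValueError if `samples` is empty.
    """
    nx, ny = -math.sin(edge_angle), math.cos(edge_angle)  # normal to the dividing line
    side_a = [val for u, v, val in samples if u * nx + v * ny >= 0.0]
    side_b = [val for u, v, val in samples if u * nx + v * ny < 0.0]
    costs = [pvariance(side) for side in (side_a, side_b) if side]
    return min(costs)


# Usage: the left views see the occluded surface (consistent), the right views see the occluder.
samples = [(-1.0, -1.0, 5.0), (-1.0, 1.0, 5.0), (1.0, -1.0, 1.0), (1.0, 1.0, 9.0)]
print(occlusion_aware_cost(samples, math.pi / 2))  # → 0.0
```

Plain photo-consistency over all four views would report a variance of 8.0 here and wrongly reject the correct depth.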

|

|

Oriented Light-Field Windows for Scene Flow

ICCV 2015

For Lambertian surfaces focused to the correct depth, the 2D

distribution of angular rays from a pixel remains consistent. We build

on this idea to develop an oriented 4D light-field window that accounts

for shearing (depth), translation (matching), and windowing. Our

main application is to scene flow, a generalization of optical flow to

the 3D vector field describing the motion of each point in the scene.

Paper: PDF

|

|

Depth Estimation and Specular Removal for Glossy Surfaces Using Point and Line Consistency with Light-Field Cameras

PAMI 2015 (to appear)

Light-field cameras have now become available in both consumer and

industrial applications, and recent papers have demonstrated practical

algorithms for depth recovery from a passive single-shot

capture. However, current light-field depth estimation methods are

designed for Lambertian objects and fail or degrade for glossy or

specular surfaces. In this paper, we present a novel theory of the

relationship between light-field data and reflectance from the

dichromatic model.

Paper: PDF


Depth from Shading, Defocus, and Correspondence Using Light-Field Angular Coherence

CVPR 2015

Using shading information is essential to improve shape estimation

from light field cameras. We develop an improved technique for local

shape estimation from defocus and correspondence cues, and show how

shading can be used to further refine the depth. We show that the angular

pixels have angular coherence, which exhibits three properties: photoconsistency, depth consistency, and shading consistency.

Paper: PDF


Probabilistic Connections for Bidirectional Path Tracing

Computer Graphics Forum (EGSR) 2015

Bidirectional path tracing (BDPT) with Multiple Importance Sampling is

one of the most versatile unbiased rendering algorithms today. BDPT

repeatedly generates sub-paths from the eye and the lights, which are

connected for each pixel and then discarded. Unfortunately, many such

bidirectional connections turn out to have low contribution to the

solution. Our key observation is that we can importance sample

connections to an eye sub-path by considering multiple light sub-paths

at once and creating connections probabilistically.

Paper: PDF
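Importance sampling a connection can be sketched as choosing one of several candidate light sub-paths with probability proportional to an estimated contribution, then dividing by that probability so the estimator stays unbiased. This is a minimal illustration, assuming the per-candidate weights are already available as plain numbers (hypothetical here). The paper derives them from the actual path vertices and its selection details may differ.

```python
import bisect
import itertools
from typing import List, Tuple


def pick_connection(weights: List[float], u: float) -> Tuple[int, float]:
    """Sample a candidate index with probability proportional to its weight.

    All weights must be positive. `u` is a uniform random number in [0, 1).
    Returns (index, probability of choosing that index).
    """
    total = sum(weights)
    cdf = list(itertools.accumulate(weights))
    i = bisect.bisect_right(cdf, u * total)
    i = min(i, len(weights) - 1)  # guard against round-off at the top end
    return i, weights[i] / total


def estimate(contribs: List[float], weights: List[float], u: float) -> float:
    """One-sample unbiased estimate of sum(contribs) using a single connection."""
    i, p = pick_connection(weights, u)
    return contribs[i] / p
```

If the weights were exactly proportional to the true contributions, every choice would return the full sum (zero variance); in practice the weights are approximations, and the closer they are, the lower the variance. In a renderer, `u` would come from the sampler, for example `random.random()`.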


Filtering Environment Illumination for Interactive Physically-Based Rendering in Mixed Reality

EGSR 2015

We propose accurate filtering of a noisy Monte Carlo image using

Fourier analysis. Our novel analysis extends previous works by showing

that the shape of illumination spectra is not always a line or wedge,

as in previous approximations, but rather an ellipsoid. Our primary

contribution is an axis-aligned filtering scheme that preserves the

frequency content of the illumination. We also propose a novel

application of our technique to mixed reality scenes, in which virtual

objects are inserted into a real video stream so as to become

indistinguishable from the real objects.

Paper: PDF

Video: MP4

Supplementary: PDF
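Applying an axis-aligned filter in screen space amounts to a Gaussian blur whose width varies per pixel. The minimal 1D sketch below takes those widths as inputs. In the paper they come from the Fourier analysis of the illumination spectra, which is not reproduced here, and the function name is hypothetical.

```python
import math
from typing import List


def axis_aligned_filter(noisy: List[float], sigmas: List[float]) -> List[float]:
    """Filter a 1D scanline with a Gaussian whose width varies per pixel.

    sigmas[i] is the screen-space standard deviation (in pixels) chosen for
    pixel i; a width of 0 leaves that pixel unfiltered.
    """
    out = []
    n = len(noisy)
    for i, sigma in enumerate(sigmas):
        if sigma <= 0.0:
            out.append(noisy[i])
            continue
        radius = int(math.ceil(3.0 * sigma))  # truncate the kernel at 3 sigma
        num = den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w = math.exp(-0.5 * ((j - i) / sigma) ** 2)
            num += w * noisy[j]
            den += w
        # Normalizing by the summed weights keeps constant regions unchanged.
        out.append(num / den)
    return out
```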


Recent Advances in Adaptive Sampling and Reconstruction for Monte Carlo Rendering

EUROGRAPHICS 2015

In this paper we survey recent

advances in adaptive sampling and reconstruction. We distinguish between a priori

methods that analyze the light transport equations and derive sampling

rates and reconstruction filters from this analysis, and a posteriori

methods that apply statistical techniques to sets of samples.

Paper: PDF
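As a concrete instance of the a posteriori family, one simple heuristic spends a sample budget in proportion to each pixel's estimated error. The minimal sketch below uses the variance of the pixel mean (sample variance divided by sample count) as that error. It is an illustrative assumption, not a specific method from the survey, and the function name is hypothetical.

```python
from statistics import variance
from typing import List


def allocate_samples(pixel_samples: List[List[float]], budget: int) -> List[int]:
    """Split `budget` extra samples across pixels in proportion to the
    estimated variance of each pixel's mean (sample variance / count).

    Each pixel needs at least two initial samples. Largest-remainder rounding
    makes the allocations sum exactly to `budget`.
    """
    errs = [variance(s) / len(s) for s in pixel_samples]
    total = sum(errs)
    if total == 0.0:
        # Every pixel looks converged; spread the budget evenly.
        errs = [1.0] * len(pixel_samples)
        total = float(len(pixel_samples))
    shares = [budget * e / total for e in errs]
    alloc = [int(s) for s in shares]
    # Hand out the samples lost to truncation, largest fractional parts first.
    order = sorted(range(len(shares)), key=lambda i: shares[i] - alloc[i], reverse=True)
    for i in order[: budget - sum(alloc)]:
        alloc[i] += 1
    return alloc
```

For pixels with samples `[1, 1]`, `[0, 2]` and `[0, 4]` and a budget of 10, the error estimates are 0, 1 and 4, so the allocation is `[0, 2, 8]`.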


A Light Transport Framework for Lenslet Light Field Cameras

ACM Transactions on Graphics (Apr 2015).

It is often stated that there is a fundamental tradeoff

between spatial and angular resolution of lenslet light field cameras,

but there has been limited

understanding of this tradeoff theoretically or numerically.

In this paper, we

develop a light transport framework for understanding the fundamental

limits of light field camera resolution.

Paper: PDF

Supplementary Images: PDF


City Forensics: Using Visual Elements to Predict Non-Visual City Attributes

IEEE TVCG [SciVis 2014]. Honorable Mention for Best Paper Award

We present a method for automatically identifying and validating

predictive relationships between the visual appearance of a city and

its non-visual attributes (e.g., crime statistics, housing prices,

population density). We also test human performance for

predicting theft based on street-level images and show that our

predictor outperforms this baseline with 33% higher accuracy on average.

Paper: PDF


High-Order Similarity Relations in Radiative Transfer

SIGGRAPH 2014.

Radiative transfer equations (RTEs) with different scattering

parameters can lead to identical solution radiance fields. Similarity

theory studies this effect by introducing a hierarchy of equivalence

relations called similarity relations. Unfortunately, given a set of

scattering parameters, it remains unclear how to find altered ones

satisfying these relations, significantly limiting the theory's

practical value. This paper presents a complete exposition of

similarity theory, which provides fundamental insights into the

structure of the RTE's parameter space. To utilize the theory in its

general high-order form, we introduce a new approach to solve for the

altered parameters including the absorption and scattering

coefficients as well as a fully tabulated phase function.

Paper: PDF

Video (MPEG)
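For orientation, the classical first-order similarity relation keeps the absorption coefficient fixed and preserves the reduced scattering coefficient σ_s(1 − g), where g is the mean cosine of the phase function. The minimal sketch below applies only this lowest-order relation. The paper's contribution is the general high-order form, which also matches higher phase-function moments and is not reproduced here; the function name is hypothetical.

```python
from typing import Tuple


def first_order_similar(sigma_a: float, sigma_s: float, g: float,
                        g_new: float) -> Tuple[float, float]:
    """Return (sigma_a*, sigma_s*) for a medium with mean cosine g_new.

    First-order similarity relation:
        sigma_a* = sigma_a
        sigma_s* * (1 - g_new) = sigma_s * (1 - g)
    """
    if not (-1.0 <= g <= 1.0 and -1.0 <= g_new < 1.0):
        raise ValueError("need -1 <= g <= 1 and -1 <= g_new < 1")
    return sigma_a, sigma_s * (1.0 - g) / (1.0 - g_new)
```

For example, a strongly forward-scattering medium with σ_s = 10 and g = 0.9 maps to an isotropic medium (g* = 0) with σ_s* ≈ 1 and the same σ_a.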


Rendering Glints on High-Resolution Normal-Mapped Specular Surfaces

SIGGRAPH 2014.

Complex specular surfaces under sharp point lighting show a

fascinating glinty appearance, but rendering it is an unsolved

problem. Using Monte Carlo pixel sampling for this purpose is

impractical: the energy is concentrated in tiny highlights that take up

a minuscule fraction of the pixel. We instead compute an accurate

solution using a completely different deterministic approach.

Paper: PDF

Video (MPEG)


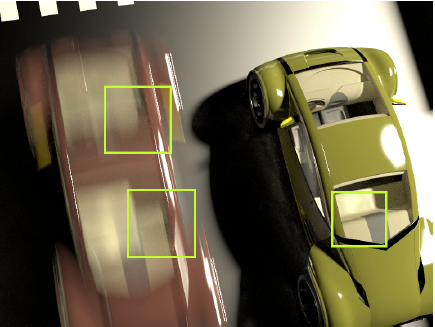

Factored Axis-Aligned Filtering for Rendering Multiple Distribution Effects

SIGGRAPH 2014.

We propose an approach to adaptively sample and filter for

simultaneously rendering primary (defocus blur) and secondary

(soft shadows and indirect illumination) distribution effects, based on

a multi-dimensional frequency analysis of the direct and indirect

illumination light fields, and factoring texture and irradiance.

Paper: PDF

Video (MPEG)


Discrete Stochastic Microfacet Models

SIGGRAPH 2014.

This paper investigates rendering glittery surfaces, ones which

exhibit shifting random patterns of glints as the surface or

viewer moves. It applies both to dramatically glittery surfaces that

contain mirror-like flakes and also to rough surfaces that exhibit more

subtle small-scale glitter, without which most glossy surfaces

appear too smooth in close-up. In this paper we present a stochastic

model for the effects of random subpixel structures that generates

glitter and spatial noise that behave correctly under different

illumination conditions and viewing distances, while also being

temporally coherent so that they look right in motion.

Paper: PDF

Video (MPEG)
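The discrete idea can be illustrated by counting flakes directly. If a pixel footprint holds N flakes and each independently reflects toward the viewer with probability p (the fraction of the normal distribution inside the relevant cone), the number that glint is Binomial(N, p). This is a naive, minimal sketch: it draws each flake explicitly, and the per-pixel seed is a hypothetical way to keep the pattern fixed between frames. The paper uses an efficient hierarchical scheme instead.

```python
import random


def glinting_flakes(n_flakes: int, p_reflect: float, pixel_seed: int) -> int:
    """Count how many of `n_flakes` discrete flakes reflect light to the viewer.

    Each flake reflects independently with probability `p_reflect`, so the
    count is Binomial(n_flakes, p_reflect). A fixed per-pixel seed replays the
    same random numbers, so the pattern does not flicker between frames.
    """
    rng = random.Random(pixel_seed)
    return sum(1 for _ in range(n_flakes) if rng.random() < p_reflect)


def glint_intensity(n_flakes: int, p_reflect: float, pixel_seed: int) -> float:
    """Fraction of the footprint's flakes that glint; its mean is p_reflect."""
    return glinting_flakes(n_flakes, p_reflect, pixel_seed) / n_flakes
```

With many flakes per footprint the fraction approaches p and the surface looks smooth; with few flakes it fluctuates strongly from pixel to pixel, which reads as glitter. Because the same seed replays the same random numbers, raising p only adds glinting flakes, so a gradual change in lighting does not reshuffle the pattern.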


Depth Estimation for Glossy Surfaces with Light-Field Cameras

ECCV 2014 Workshop on Light Fields for Computer Vision.

Light-field cameras have now become available in both consumer

and industrial applications, and recent papers have demonstrated

practical algorithms for depth recovery from a passive single-shot

capture. In this paper, we develop an iterative approach to use the

benefits of light-field data to estimate and remove the specular

component, improving the depth estimation. The approach enables

light-field data depth estimation to support both specular and diffuse

scenes.

Paper: PDF


User-Assisted Video Stabilization

EGSR 2014.

We present a user-assisted video stabilization algorithm that is able

to stabilize challenging videos. First, we cluster

tracks and visualize them on the warped video. The user ensures that

appropriate tracks are selected by clicking on track clusters to

include or exclude them. Second, the user can directly specify how

regions in the output video should look by drawing quadrilaterals to

select and deform parts of the frame.

Paper: PDF

Video (MPEG)


Depth from Combining Defocus and Correspondence Using Light-Field Cameras

ICCV 2013.

Light-field cameras have recently become available to the consumer

market. An array of micro-lenses captures enough information that one

can refocus images after acquisition, as well as shift one's viewpoint

within the sub-apertures of the main lens, effectively obtaining

multiple views. Thus, depth cues from both defocus and

correspondence are available simultaneously in a single capture, and we show

how to exploit both by analyzing the epipolar plane image (EPI).

Paper: PDF

Video (MPEG)
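Both cues can be read off a sheared EPI. Shearing by the correct slope lines up all views of a scene point, so the angular variance (the correspondence cue) drops to zero, and the refocused scanline (the mean over views) is sharpest (the defocus cue). This is a minimal sketch under stated assumptions: one angular dimension, integer shears, and one plausible form of each cue. The paper's exact cue definitions and the way it combines them are not reproduced, and all names are hypothetical.

```python
from statistics import mean, pvariance
from typing import List

EPI = List[List[float]]  # epi[u][x]: one row per angular view u


def sheared_samples(epi: EPI, x: int, alpha: int) -> List[float]:
    """Samples seen by pixel x after shearing the EPI by integer slope alpha."""
    n_views, width = len(epi), len(epi[0])
    centre = n_views // 2
    out = []
    for u in range(n_views):
        xs = x + alpha * (u - centre)
        if 0 <= xs < width:  # the centre view is always in range
            out.append(epi[u][xs])
    return out


def refocus(epi: EPI, alpha: int) -> List[float]:
    """Average over views after shearing: the refocused scanline."""
    return [mean(sheared_samples(epi, x, alpha)) for x in range(len(epi[0]))]


def correspondence_cue(epi: EPI, x: int, alpha: int) -> float:
    """Angular variance at x; small when alpha matches the true depth."""
    return pvariance(sheared_samples(epi, x, alpha))


def defocus_cue(epi: EPI, x: int, alpha: int) -> float:
    """Spatial contrast (|discrete Laplacian|) of the refocused scanline at x."""
    img = refocus(epi, alpha)
    left, right = img[max(x - 1, 0)], img[min(x + 1, len(img) - 1)]
    return abs(left - 2.0 * img[x] + right)
```

A depth estimate would pick, per pixel, the shear with the lowest correspondence response or the highest defocus response; the two cues tend to fail in different places, which is the motivation for combining them.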


External mask based depth and light field camera

ICCV 2013 Workshop on Consumer Depth Cameras for Vision.

We present a method to convert a digital single-lens reflex (DSLR)

camera into a high-resolution consumer depth and light-field camera by

affixing an external aperture mask to the main lens. Compared to the

existing consumer depth and light field cameras, our camera is easy to

construct with minimal additional costs, and our design is camera and

lens agnostic. The main advantage of our design is the ease of switching